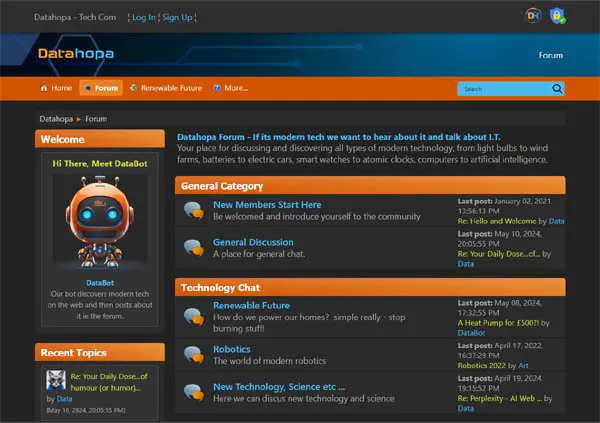

Newer, Bigger, Stronger, Faster GPT-based brain

Started by Art, January 14, 2021, 13:26:37 PM

Total views: 14,173